Here's a question:

How did we go from room-sized calculators to attempting to create human intelligence using 1s and 0s?

Let's Rewind...

Before Common Era

The story of software development starts all the way back around 2700 BCE, when the abacus was first used to work out arithmetic problems (its earliest forms are usually traced to ancient Mesopotamia). Fast forward roughly 2,600 years to around 90 BCE: the ancient Greeks had evolved the abacus and built other inventions, most notably the "Antikythera mechanism", often described as the first known analogue computer. It was used for various time-based calculations, such as predicting the phases of the moon and tracking the four-year cycle of the Olympic Games.

Modern Era

About 2,000 years later, in 1898, computers were still used for astronomical calculations, but these "computers" were actual women who carried out the calculations for well-known astronomers. The method they used to pass data between them, with each person performing one step of the calculation, was arguably an early instance of pipeline architecture.

Actual First Electronic Computer

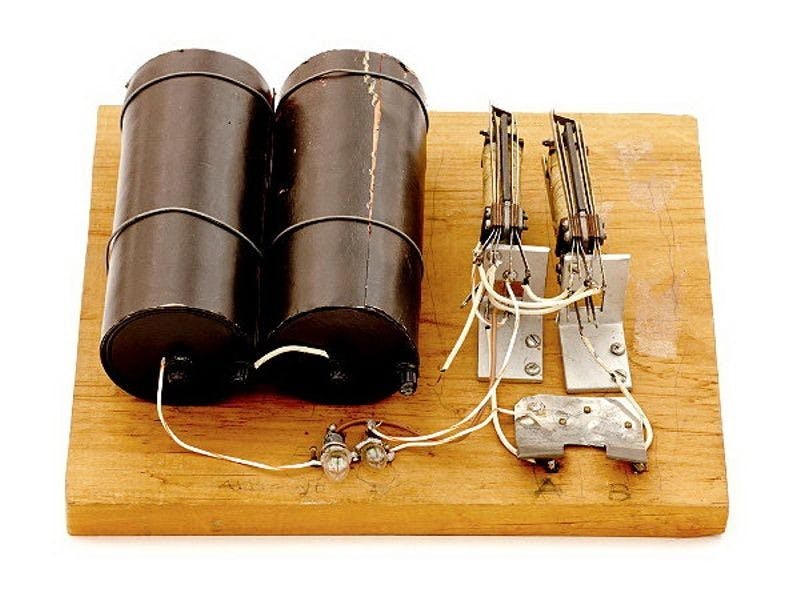

In 1937, George Stibitz applied George Boole's logic to telephone relays and flashlight bulbs to build one of the first digital computing devices, known as the "Model K". It was named after the kitchen table on which it was assembled.

Idea of Software came into existence

In the 1940s, as WW2 raged on, so did the sphere of Computer Science. In America, Grace Hopper was programming the Harvard Mark I; she went on to champion the idea that software is something in itself and, in the early 1950s, created one of the first compilers, which turned higher-level instructions into machine language. Across the Atlantic in England, Alan Turing was laying the theoretical foundations of modern Computer Science, while in Germany Konrad Zuse created the first high-level programming language and the first program-controlled computer, known as the "Z3".

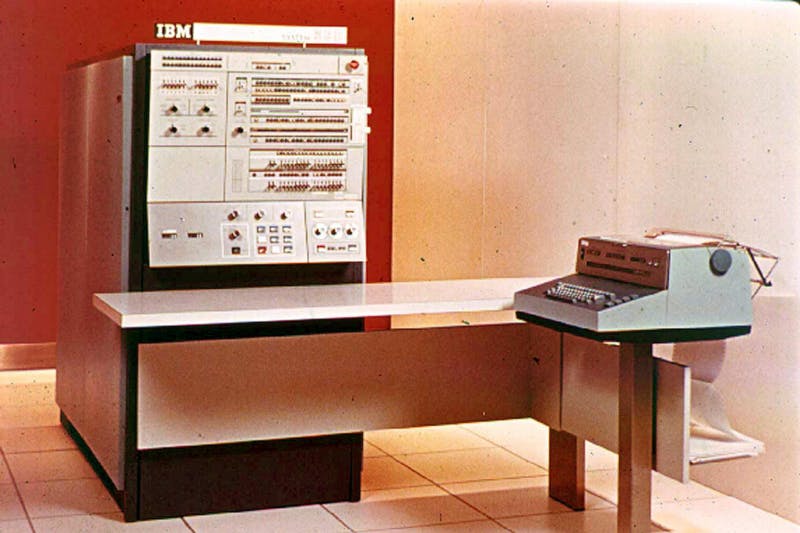

In the 1960s, Margaret Hamilton coined the term "Software Engineering" and used it to distinguish her work from that of the hardware engineers at NASA. Then, in the mid-1960s, building on ideas such as Grace Hopper's, IBM introduced the System/360, a family of compatible computers that made it practical for outside parties to develop software for it. As a result, new companies were able to develop software independently.

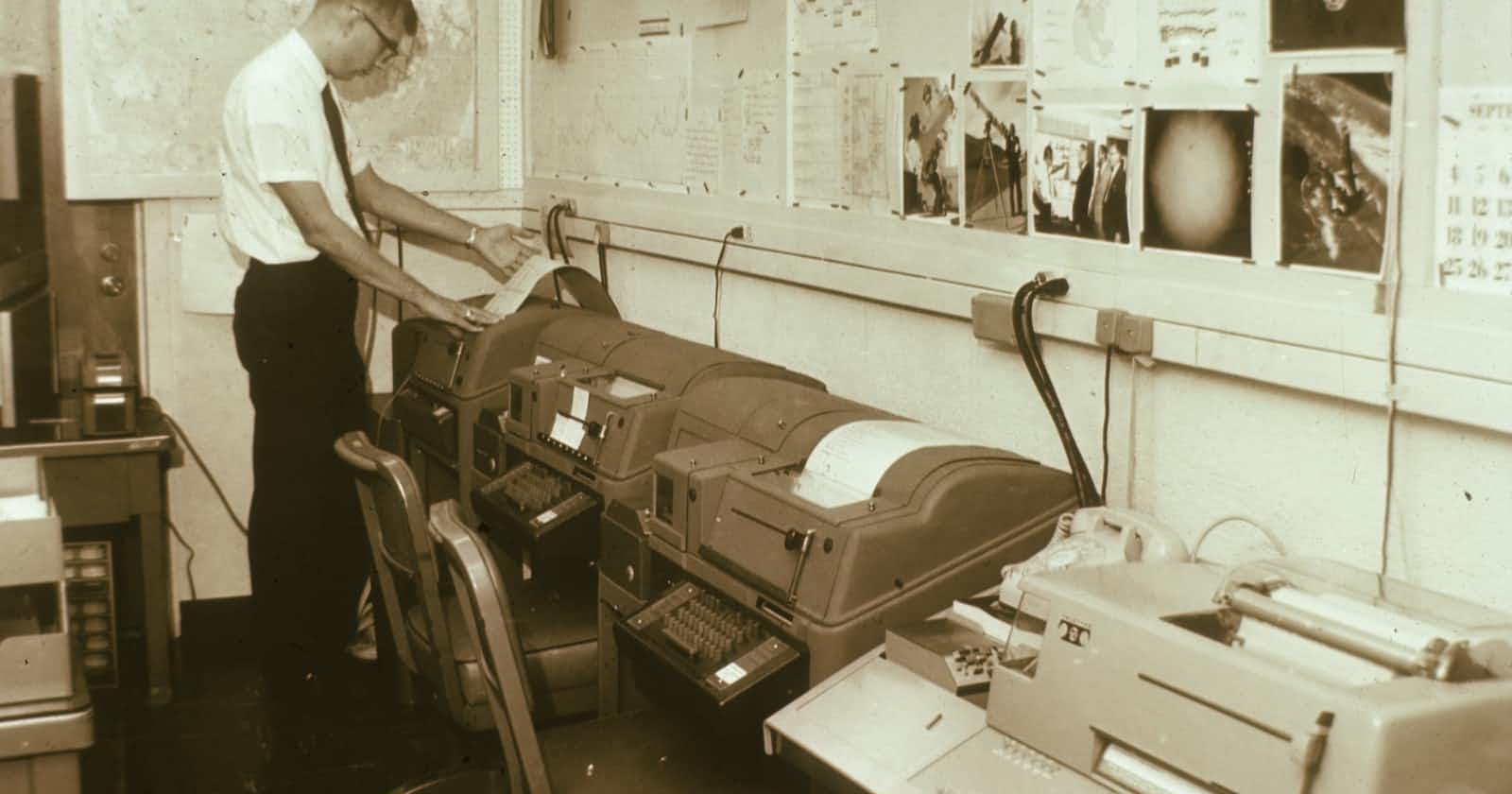

Around this time, Software Engineering was really kicking off, but with one big problem: computers were still the size of rooms and were more valuable than the developers who worked on them.

First Programmable Personal Computer is Created

In the early 1970s, several personal computers were created, making computers cheaper and more widely available. The same decade saw an explosion of revolutionary new practices in Software Engineering.

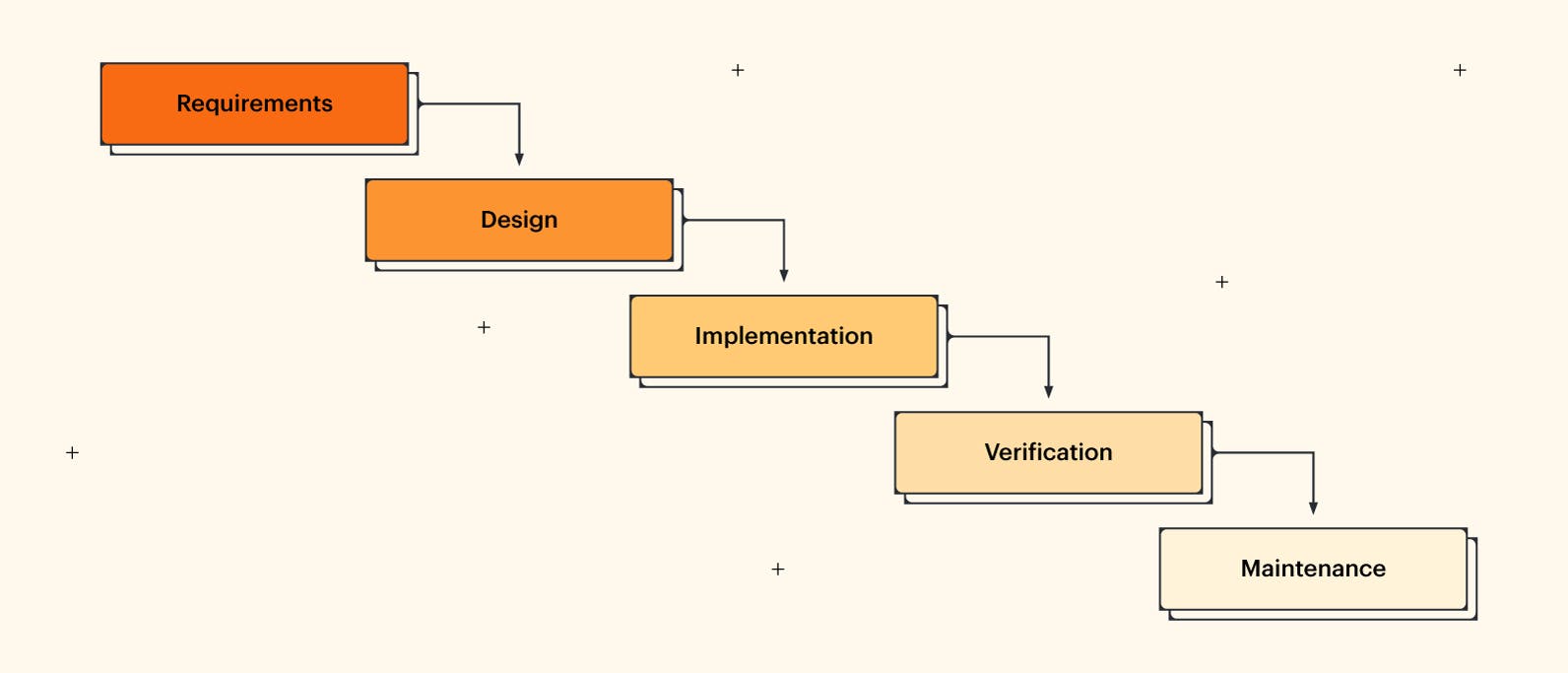

First, in 1972, Edsger Dijkstra gave us the idea of Structured Programming in the book "Structured Programming", and in 1974 Larry Constantine was among the first to write about Modular Programming and Coupling. Around the same period, Robert Floyd and Tony Hoare developed formal ways to reason about programs, bringing Computer Science and Software Engineering closer together. Also in the same decade, Winston Royce is credited with giving us a more formal software development process, the Waterfall Methodology.

Golden Age of Software Engineering

After all these new advances, in the 1980s Software Engineering enters its Golden Age.

In the early 1980s, Maestro I, the first Integrated Development Environment, developed by Softlab Munich, plays a prominent role in Software Engineering, as do languages such as C and the object-oriented C++. In 1983, the Ada Programming Language, designed by a team led by Dr. Jean Ichbiah, was standardized as a safe High-Level Programming Language.

Bill Gates and Microsoft released Windows 1.0 in 1985. Apple was already in the market at this time, having released their first computer nine years earlier in 1976. In the late 1980s, CASE (computer-aided software engineering) tools were used extensively to help support design methods.

Sir Tim Berners-Lee invented the World Wide Web in 1989. Shortly after, on August 6th, 1991, the first website, info.cern.ch, was put online, and it can still be visited today.

In 1993, the first widely popular user-friendly web browser, "Mosaic", came onto the scene. Its co-author, Marc Andreessen, went on to co-found Netscape, whose browser followed in 1994, and his work helped pave the way for what we know as the internet today.

21st Century

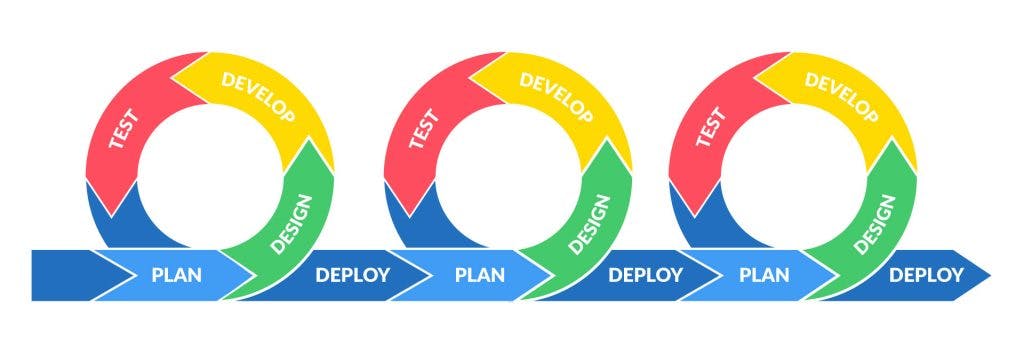

In the 2000s, C# came along and became a competitor of Java. Python and Perl were also becoming increasingly popular Programming Languages in the 2000s. The Agile Manifesto was published in 2001, and over the decade many companies began experimenting with this new approach. Agile became mainstream and, by the early 2010s, was being used to handle larger system development.

Some years later, Machine Learning finally takes off: programs are developed that can teach themselves to recognize people, create strategies and reach their own conclusions through trial and error. This all happened in roughly 100 years. Where will we be 100 years from now?

What next?

In the coming years, Software Development is going to use Artificial Intelligence to make many different types of Applications easier to build. From self-driving cars to providing room service, the future of Artificial Intelligence and Software Development is limitless and exciting. We just have to be careful about the robot takeover XD

That's all for this blog, hope you enjoyed reading it :)